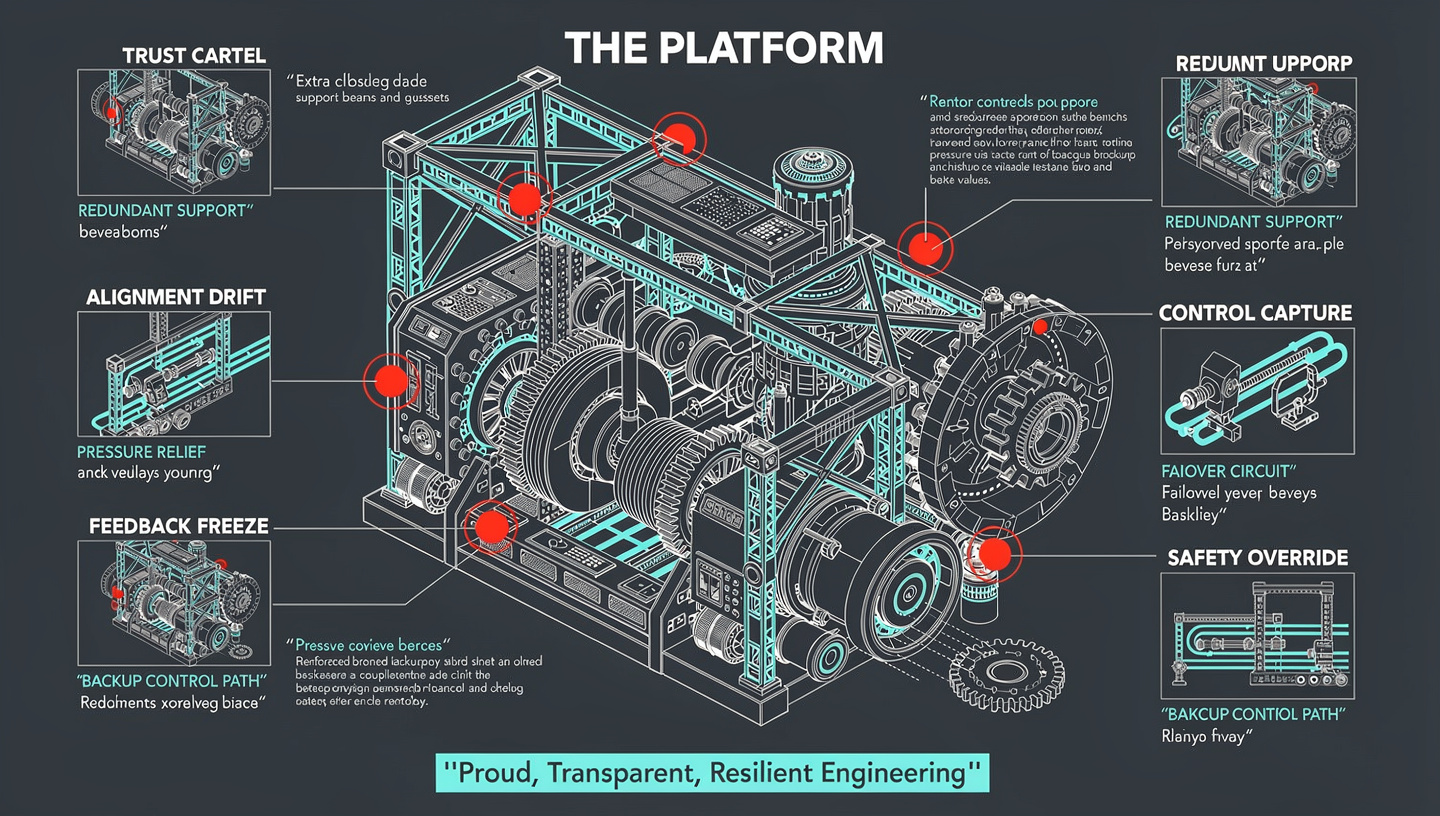

We have designed for success, but a responsible architect must also design against collapse.

A system as complex and interwoven as Bseech will not fail simply; it will fail in specific, catastrophic patterns. By naming these Failure Modes and engineering defenses against them from inception, we build not just a platform but a resilient institution. Here are the six systemic ruptures we must prevent at all costs.

1. The Trust Cartel: When Reputation Becomes a Monopoly

Mode: High-trust nodes discover they can collude, using their position in the Trust Graph to artificially inflate prices for their services, blackball new entrants, and create a closed aristocracy of access. The meritocratic promise decays into a digital feudalism.

Defense: Algorithmic Anti-Collusion Protocols. The system must constantly scan for clusters of nodes that only work with each other, exhibit suspiciously synchronized pricing, or give each other disproportionately high feedback. It must break these patterns by mandating transparency in private deals and proactively introducing high-potential new nodes into their networks.
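A minimal sketch of how such a scan might score one candidate cluster against the three signals named above. The `Engagement` record, the 0-5 rating scale, the coefficient-of-variation measure for pricing, and every threshold are illustrative assumptions, not a specification of Bseech's protocol. Finding candidate clusters in the first place (e.g. with community detection on the Trust Graph) is out of scope here.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass(frozen=True)
class Engagement:
    requester: str
    provider: str
    rating: float  # feedback the requester left for the provider (0-5 scale assumed)


def insularity(cluster: set[str], engagements: list[Engagement]) -> float:
    """Share of the cluster's engagements in which both parties are cluster members."""
    touching = [e for e in engagements if e.requester in cluster or e.provider in cluster]
    if not touching:
        return 0.0
    internal = [e for e in touching if e.requester in cluster and e.provider in cluster]
    return len(internal) / len(touching)


def feedback_inflation(cluster: set[str], engagements: list[Engagement]) -> float:
    """Mean rating members give each other minus mean rating outsiders give members."""
    inside = [e.rating for e in engagements if e.requester in cluster and e.provider in cluster]
    outside = [e.rating for e in engagements if e.requester not in cluster and e.provider in cluster]
    if not inside or not outside:
        return 0.0
    return mean(inside) - mean(outside)


def price_dispersion(prices_by_period: list[list[float]]) -> float:
    """Average coefficient of variation of members' quoted prices per period.

    Values near zero mean the members quote in lockstep.
    """
    ratios = [pstdev(p) / mean(p) for p in prices_by_period if len(p) > 1 and mean(p) > 0]
    return mean(ratios) if ratios else float("inf")


def collusion_signals(
    cluster: set[str],
    engagements: list[Engagement],
    prices_by_period: list[list[float]],
    insularity_limit: float = 0.7,   # hypothetical threshold
    inflation_limit: float = 1.0,    # hypothetical threshold, in rating points
    dispersion_floor: float = 0.02,  # hypothetical threshold
) -> list[str]:
    """Return the names of the signals this cluster trips; any one warrants review."""
    signals = []
    if insularity(cluster, engagements) >= insularity_limit:
        signals.append("insularity")
    if feedback_inflation(cluster, engagements) >= inflation_limit:
        signals.append("feedback_inflation")
    if price_dispersion(prices_by_period) <= dispersion_floor:
        signals.append("price_sync")
    return signals
```

Each signal is kept separate rather than blended into one score so that the intervention (a transparency requirement, or introducing a new node) can target the specific pattern detected.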

2. The Compassion Crash: When Efficiency Erodes Humanity

Mode: The platform's optimization for speed, cost, and perfect matching becomes so efficient that it eliminates all slack, all forgiveness, all space for human growth. A single minor failure leads to catastrophic reputation loss. Users become terrified to take risks or mentor newcomers, and the network's vitality stagnates.

Defense: The Compassion Buffer, engineered directly into the reputation system. It includes mandatory "First-Failure Forgiveness" clauses, reputation decay that rewards recovery, and the Sanatorium System. The platform must value resilience and growth as highly as it values initial perfection.
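The sketch below shows one possible reading of two of these mechanisms: the earliest failure carries no penalty, and each later penalty fades with a half-life that successful work after the failure accelerates. The `ReputationLedger` class, the penalty size, the 180-day half-life, and the 30-day recovery credit are all hypothetical; the Sanatorium System is not modelled.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ReputationLedger:
    """Hypothetical Compassion Buffer: forgive the first failure, let later ones fade."""

    base: float = 100.0
    failure_penalty: float = 10.0       # assumed cost of one unforgiven failure
    half_life_days: float = 180.0       # assumed: a penalty halves every 180 days
    recovery_credit_days: float = 30.0  # assumed: each later success ages a penalty 30 extra days
    failures: list[float] = field(default_factory=list)   # days on which failures occurred
    successes: list[float] = field(default_factory=list)  # days on which work succeeded

    def record_failure(self, day: float) -> None:
        self.failures.append(day)

    def record_success(self, day: float) -> None:
        self.successes.append(day)

    def score(self, today: float) -> float:
        total_penalty = 0.0
        # First-Failure Forgiveness: the earliest recorded failure carries no penalty.
        for day in sorted(self.failures)[1:]:
            if day > today:
                continue
            # Recovery: each success after the failure makes the penalty "older",
            # so it decays faster than the calendar alone would allow.
            recoveries = sum(1 for s in self.successes if day < s <= today)
            effective_age = (today - day) + recoveries * self.recovery_credit_days
            total_penalty += self.failure_penalty * 0.5 ** (effective_age / self.half_life_days)
        return self.base - total_penalty
```

With these assumed parameters, a second failure on day 10 costs 10 points immediately and 5 points by day 190; six successful projects in between shrink it to 2.5 points, so recovery is visibly rewarded.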

3. The Context Collapse: When the Unified Self Becomes a Prison

Mode: The rich, multidimensional Unified Self, designed for nuance, is weaponized. Requesters make decisions based on a single, out-of-context data point from a decade prior ("You failed a project in 2025, therefore you are unreliable"). The full mosaic is ignored for one flawed tile, freezing careers in amber.

Defense: Contextual Firewalls and the "Right to Evolution". The system must force reviewers to engage with multiple vectors of the Unified Self. It must allow users to "context-lock" older achievements and failures, making them visible only with the user's permission for deep due diligence, not for casual browsing.
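Both halves of this defense can be expressed as simple checks: a visibility filter that hides context-locked records unless the viewer holds the owner's grant *and* is performing due diligence, and an admissibility rule that rejects a review built on too few vectors. The `Record`, `UnifiedSelf`, and `Purpose` types and the three-vector minimum are hypothetical names and values chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Purpose(Enum):
    CASUAL = "casual"
    DUE_DILIGENCE = "due_diligence"


@dataclass(frozen=True)
class Record:
    vector: str       # which dimension of the Unified Self, e.g. "delivery"
    year: int
    summary: str
    context_locked: bool = False


@dataclass
class UnifiedSelf:
    owner: str
    records: list[Record]
    grants: set[str] = field(default_factory=set)  # viewers granted deep access by the owner

    def visible_to(self, viewer: str, purpose: Purpose) -> list[Record]:
        # Locked records surface only for granted viewers doing due diligence,
        # never for casual browsing, even by a granted viewer.
        deep_access = purpose is Purpose.DUE_DILIGENCE and viewer in self.grants
        return [r for r in self.records if not r.context_locked or deep_access]


def review_is_admissible(consulted: list[Record], min_vectors: int = 3) -> bool:
    """Reject decisions that rest on fewer than `min_vectors` distinct vectors."""
    return len({r.vector for r in consulted}) >= min_vectors
```

Under this rule, the "failed a project in 2025" judgment from the Mode above is rejected on two counts: the record is locked for casual viewers, and a single tile never meets the multi-vector minimum.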

4. The Sovereignty Split: When the Protocol and the People Diverge

Mode: The stewards of the platform's core protocols (the "Protocol Layer") make a change that is technically logical but socially disastrous. Or, a large user base demands a change that would corrupt the protocol's integrity. The governing body and the community enter irreconcilable conflict.

Defense: The Dual-Sovereignty Governance Model. Clear, constitutional separation of powers. The Protocol Stewards guard technical integrity and long-term vision. The Community Chambers govern social policy, fees, and feature prioritization. Major changes require a "Double Key" from both.
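The "Double Key" rule can be read as a small authorization check: minor proposals need only the body whose jurisdiction they fall under, while major ones need both. This is a sketch under that assumption; how a proposal is classified as "major" is left open by the text, so the `major` flag here is a hypothetical input.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Domain(Enum):
    TECHNICAL = "technical"  # protocol integrity and long-term vision
    SOCIAL = "social"        # social policy, fees, feature prioritization


class Body(Enum):
    STEWARDS = "protocol_stewards"
    CHAMBERS = "community_chambers"


# Separation of powers: which body holds the single key for minor changes.
JURISDICTION = {Domain.TECHNICAL: Body.STEWARDS, Domain.SOCIAL: Body.CHAMBERS}


@dataclass
class Proposal:
    title: str
    domain: Domain
    major: bool  # hypothetical: classification criteria are not specified
    approvals: set[Body] = field(default_factory=set)

    def approve(self, body: Body) -> None:
        self.approvals.add(body)

    def required_keys(self) -> set[Body]:
        if self.major:
            return {Body.STEWARDS, Body.CHAMBERS}  # the "Double Key"
        return {JURISDICTION[self.domain]}

    def can_enact(self) -> bool:
        return self.required_keys() <= self.approvals
```

Because neither body's approval alone satisfies a major proposal, a technically logical but socially disastrous change stalls at the Chambers, and a popular but integrity-breaking demand stalls at the Stewards.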

5. The Ghost Archipelago: When Capability Flees Reality

Mode: The most talented providers become so valuable, so heavily retained, and so busy within the platform's virtual economy that they stop engaging with the physical, local communities that nurtured them. They become ghost nodes, rich in platform capital but poor in grounded reality, leading to a dangerous divorce between the digital elite and the material world.

Defense: The Local-Global Anchor Mandate. The platform incentivizes and highlights "glocal" retainers: commitments that use global skills to solve local, physical problems. It tracks and rewards "community impact" as a reputational vector, ensuring the network's value flows back into tangible reality.
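One way to make "community impact" a measurable vector is to take the share of a provider's retained hours spent on local, physical work, and to surface such retainers first when listing them. The `Retainer` type, its `local_physical` flag, and the hours-based measure are assumptions for illustration; the mandate does not say how locality is verified.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Retainer:
    provider: str
    hours: float
    local_physical: bool  # hypothetical: serves a local, physical problem near the provider


def community_impact(retainers: list[Retainer], provider: str) -> float:
    """Share (0-1) of a provider's retained hours spent on glocal commitments."""
    mine = [r for r in retainers if r.provider == provider]
    total = sum(r.hours for r in mine)
    if total == 0:
        return 0.0
    return sum(r.hours for r in mine if r.local_physical) / total


def highlight_order(retainers: list[Retainer]) -> list[Retainer]:
    """Glocal retainers first, then larger commitments before smaller ones."""
    return sorted(retainers, key=lambda r: (not r.local_physical, -r.hours))
```

A share rather than a raw hour count keeps the vector comparable between a provider with a few retainers and one with dozens.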

6. The Black Box Tyranny: When the Synthesis Engine Becomes Incomprehensible

Mode: The platform's predictive algorithms and Synthesis Engine become so complex that no human, not even its creators, can fully explain why it makes a certain match or recommendation. It begins to make decisions that feel deeply unfair or bizarre, but because its logic is opaque, it cannot be argued with or debugged, eroding all trust.

Defense: Radical Explainability as a Core Protocol. Every significant platform suggestion must be accompanied by a "Reasoning Trace": a simplified, human-readable chain of logic citing the trust data, pattern history, and rules that led to it. Unexplainable AI is forbidden by the First Law of Coordination.
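A Reasoning Trace can be enforced at publication time: a suggestion whose trace fails to cite each kind of evidence is refused rather than shown. This sketch assumes that all three categories named above are mandatory for every significant suggestion; the `Step`, `Suggestion`, and `publish` names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Evidence(Enum):
    TRUST_DATA = "trust data"
    PATTERN_HISTORY = "pattern history"
    RULE = "rule"


@dataclass(frozen=True)
class Step:
    evidence: Evidence
    claim: str  # one human-readable link in the chain of logic


@dataclass(frozen=True)
class Suggestion:
    subject: str
    trace: tuple[Step, ...]


class UnexplainableSuggestion(Exception):
    """Raised instead of publishing, per the First Law of Coordination."""


def publish(s: Suggestion) -> str:
    cited = {step.evidence for step in s.trace}
    missing = [e.value for e in Evidence if e not in cited]
    if missing:
        raise UnexplainableSuggestion(f"{s.subject}: trace lacks {', '.join(missing)}")
    lines = [f"Suggestion: {s.subject}"]
    lines += [f"  {i}. [{st.evidence.value}] {st.claim}" for i, st in enumerate(s.trace, 1)]
    return "\n".join(lines)
```

Making the check a hard failure, rather than a warning, is what turns explainability from a courtesy into a protocol guarantee: an opaque recommendation simply never reaches the user.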

By openly declaring these failure modes and baking their countermeasures into our foundational code, we perform the highest act of platform statesmanship. We prove that our goal is not an unchecked monopoly, but a stable, self-correcting public utility for human collaboration. We build with our eyes wide open to the darkness, so the light we create is enduring and safe.
